Accuracy, Recall, F1 Score [Resources] [AI Study]

- F1 score for machine learning systems [link] [excel]

- for imbalanced datasets: F1 score

- for balanced datasets: accuracy, F1 score

- F1 score, precision, recall

- How to compute F1 score

- metrics of classifier performance for balanced data

- metrics of classifier performance for imbalanced data

- Data preparation [link]

- Precision and Recall for multi-class classification [link] [link]

- The accuracy (of model prediction)

- is the proportion or percentage of correctly predicted labels over all predictions

- pitfall: accuracy can be relatively 'high' even when the model predicts only the 'not so important' class labels fairly accurately
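
The pitfall above can be illustrated with a minimal sketch. The data here is hypothetical (95 "common" instances and 5 "rare" ones), and the "model" simply predicts the majority class every time; the helper names `accuracy` and `recall` are chosen for illustration only.

```python
def accuracy(gold, predicted):
    """Fraction of predictions that match the gold labels."""
    correct = sum(g == p for g, p in zip(gold, predicted))
    return correct / len(gold)


def recall(gold, predicted, label):
    """Fraction of gold instances of `label` that were predicted as `label`."""
    total = sum(g == label for g in gold)
    hits = sum(g == label and p == label for g, p in zip(gold, predicted))
    return hits / total if total else 0.0


# Hypothetical imbalanced data: 95 "common" instances, 5 "rare" ones.
gold = ["common"] * 95 + ["rare"] * 5
# A trivial model that always predicts the majority class.
predicted = ["common"] * 100

print(accuracy(gold, predicted))        # 0.95
print(recall(gold, predicted, "rare"))  # 0.0
```

Accuracy looks high, yet none of the "rare" instances are found, which is why accuracy alone can mislead on imbalanced data.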

- The precision and recall for each class label

- are used to analyze the performance on individual class labels; the per-class values can also be averaged to get the overall precision and recall

- The precision (of the predictions for class X)

- of all instances predicted as X, how many were actually X?

- number of instances of X predicted as X / number of predictions of X

- The recall (of class X)

- number of instances of X predicted as X / number of all instances of X

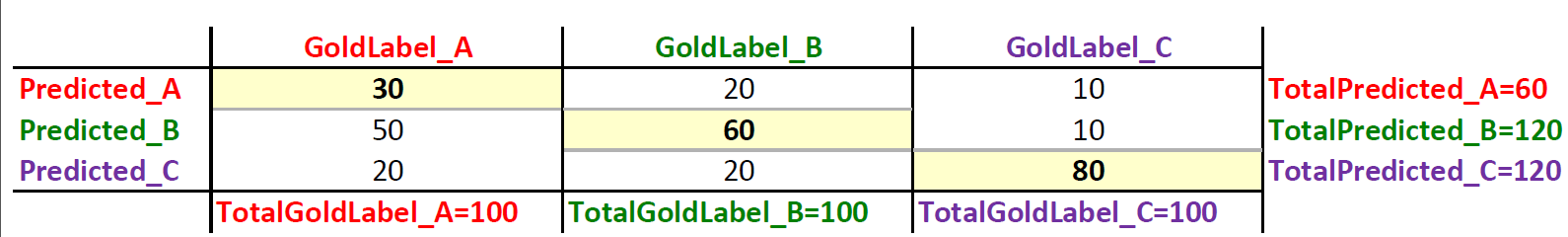

- example: a confusion matrix helps interpretation

- precision = 0.5 and recall = 0.3 for label A

- precision for label A

- TP_A / (TP_A + FP_A) = TP_A / (Total predicted as A) = TP_A / TotalPredicted_A = 30/60 = 0.5

- Only 50% of the instances predicted as A are actually A.

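- recall for label (class) A

- TP_A: a gold-A instance is predicted as A (Positive), and that prediction is correct (True)

FN_A: a gold-A instance is predicted as not A (B or C, Negative), and that prediction is wrong (False)

- TP_A / (TP_A + FN_A) = TP_A / (Total Gold for A) = TP_A / TotalGoldLabel_A = 30/100 = 0.3

- Only 30% of the label A instances are predicted correctly.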

- precision and recall for label B?

- recall = TP_B / (TP_B + FN_B) = 60/(60+20+20) = 60/100 = 0.6

- precision = TP_B / (TP_B + FP_B) = 60/(60+50+10) = 60/120 = 0.5
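
The arithmetic for labels A and B can be checked with a short sketch. The counts come from the worked example above. The F1 score is computed as the harmonic mean of precision and recall, which is the standard definition but is not spelled out in these notes; the function name `precision_recall_f1` is hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) for one class from its counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Counts taken from the worked example:
#   label A: TP=30, 60 predicted as A in total -> FP=30,
#            100 gold A in total -> FN=70
#   label B: TP=60, FP=50+10=60, FN=20+20=40
counts = {"A": (30, 30, 70), "B": (60, 60, 40)}

for label, (tp, fp, fn) in counts.items():
    p, r, f1 = precision_recall_f1(tp, fp, fn)
    print(f"{label}: precision={p:.2f} recall={r:.2f} F1={f1:.3f}")
# A: precision=0.50 recall=0.30 F1=0.375
# B: precision=0.50 recall=0.60 F1=0.545
```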

- the precision (exactness) and recall (completeness) of a model

- Accuracy Paradox

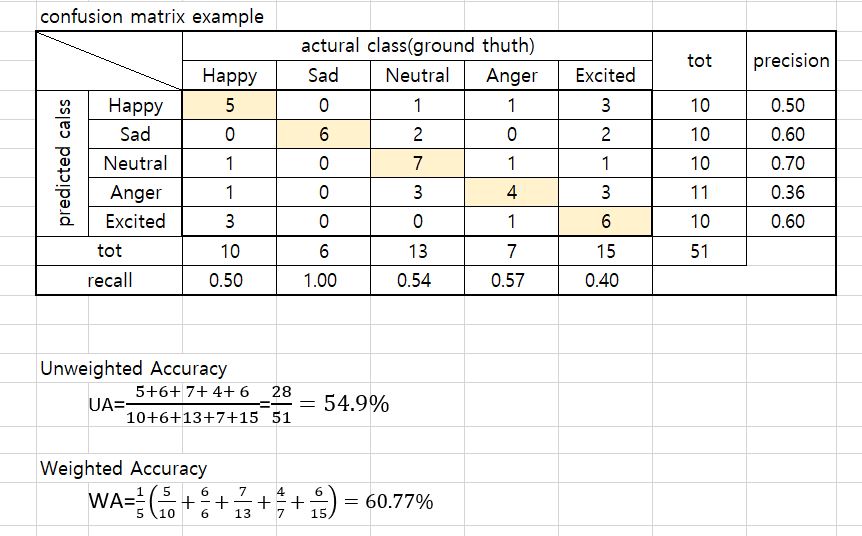

- Weighted Accuracy and Unweighted accuracy [link]

- therefore, for unbalanced classes, WA is better

- Strategies to make a dataset balanced

- Merge classes, e.g., weaker or smaller classes

- Reduce the label space, e.g., consider just happy, sad, and neutral

- Drop extra data, e.g., with 15 hap, 20 sad, and 70 neu samples, choose 15 each of hap, sad, and neu.
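
The "drop extra data" strategy can be sketched as random downsampling to the size of the smallest class. Only the counts (15, 20, 70) come from the example above; the item ids, the `downsample` helper, and the fixed seed are assumptions made for illustration.

```python
import random


def downsample(items_by_label, seed=0):
    """Randomly keep as many items per label as the smallest label has."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    smallest = min(len(items) for items in items_by_label.values())
    return {
        label: rng.sample(items, smallest)
        for label, items in items_by_label.items()
    }


# Hypothetical item ids; only the class sizes come from the notes.
data = {
    "hap": [f"hap_{i}" for i in range(15)],
    "sad": [f"sad_{i}" for i in range(20)],
    "neu": [f"neu_{i}" for i in range(70)],
}

balanced = downsample(data)
print({label: len(items) for label, items in balanced.items()})
# {'hap': 15, 'sad': 15, 'neu': 15}
```

Downsampling discards 5 sad and 55 neu samples here, so it trades data volume for balance.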
